Teaching AI to Kids With Pranath Fernando

By Shannon Edwards

I write a Substack about families and AI and was lucky to recently join a fellow AI writer, Pranath Fernando, on his podcast exploring the more sociological side of AI.

I took the opportunity, after the podcast, to probe a bit more for Pranath’s thoughts on how we should prepare kids and approach the future. I was delighted by his answers and hope families find his perspective helpful.

The Skills Needed to Tackle AI

SE: I often tell families that critical thinking skills matter more than technical skills in preparing for the future of AI. What are your thoughts?

PF: To explain my thinking on this one, a story:

On this question, I’ve been greatly influenced by the thinking of Yuval Noah Harari for quite some time. He believes education is in crisis because AI is moving so fast that it’s not clear what we should be teaching kids.

I then discovered a critique of Harari’s position by the academic Josh Brake, and I got into a debate with him on the subject. I ended up finding his arguments so persuasive that he changed my thinking completely. He made a compelling and heartfelt argument against Harari's point, saying instead that we do know what to teach kids, and that it has never been more important to teach them virtue, values, critical thinking skills and more. Josh’s arguments also hold a lot of practical merit: these are not only the kinds of things unlikely to be automated by AI any time soon, but also essential qualities if we want to nurture a healthy society.

As he rightly says, education is not only or mainly about teaching skills, but values and how to think.

But I do also recognize that some degree of practical understanding of how current AI works can be very useful, even if it's due to change.

First, because at least you’ll understand how it works now, so you can use it now. Second, because it will give you a better idea of what direction it could go next. Third, and most importantly, because learning how to use current AI technology such as ChatGPT, even to a limited degree, helps you understand it better.

Unlike passive resources such as textbooks, AI is active and interactive with you, and that’s unprecedented.

So I think, as well as critical thinking skills, some practical familiarity with how current AI tools work can be useful, even though, yes, these will likely change. A few schools are really leading the way on innovating on how best to help kids learn about AI.

SE: Have you completely changed your thinking on how kids should/can learn about AI?

PF: While I still have a high regard for much of what Harari has to say on many issues, I now look back and don’t agree that we are as helpless as he suggests regarding kids and AI education.

He believes that skill requirements are changing so fast we don’t know what will be relevant to teach. But I now believe that this is not a reason to avoid AI, but instead a reason to expose children to it and let them experience the change. At the same time, skills not specific to current AI are likely to endure, such as critical thinking, virtue, values, morality, and ethics.

SE: You do have a technical background, so what made you focus on the more societal side of AI? Presumably you'd consider yourself someone with a high EQ, which isn't an attribute celebrated in the STEM world. What's been your journey, and what makes you different?

PF: I have had several different career paths, in both the arts and sciences, at different points in my life. I suppose they got compartmentalised and separated in many ways, even though they inevitably ended up leaking into each other, which is not always welcome in disciplines that tend to treat interdisciplinary approaches as more of a theory than a practice.

I have separate bachelor’s degrees in computer science & contemporary dance, a master’s degree in cognitive science, a postgraduate diploma in somatic studies, and I completed my first year of a PhD in Artificial Intelligence. I am also a qualified reflexologist.

I’ve also undergone various other trainings in personal development, relationships, and psychology, for example attachment theory, and parts work/shadow work.

I actually used to be embarrassed about all of these different paths, because I didn’t feel that I fit anywhere. It has only been in the last couple of years that I’ve wondered if these different paths might actually be helpful to each other.

SE: What was the last technical job you had and how did it evolve?

PF: My last technical role was at an AI startup, initially as a data scientist. It was a fast-paced, crazy, and very stressful environment, but on the cutting edge of AI.

I ended up transitioning to more of a consultancy role almost by accident. It soon became clear that I was able to explain technical concepts in a way people could more easily understand, and then consult on strategy.

Employers who noticed this ability would only mention it in passing, because when you are in a technical role, you are mainly valued and rewarded for what you produce technically, such as code. Most employers still don’t value ‘soft skills’ in technical positions, so it was never really recognised formally, either by my previous employers or even by me. It always seemed a nice-to-have that I could explain things in plain English to clients or non-technical stakeholders, but ultimately no one saw this skill as central or essential to my technical role.

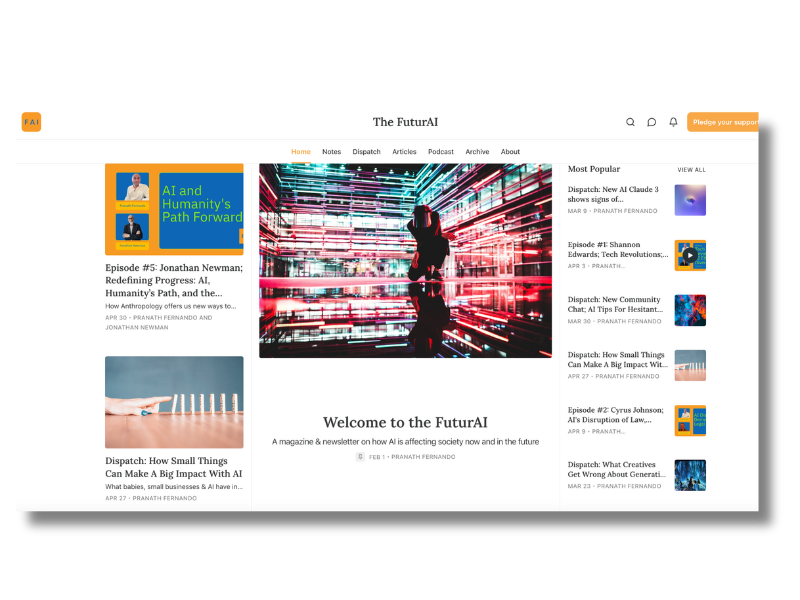

More recently, I’ve channeled my range of skills into my new online business, including a newsletter, a podcast and, soon, online courses, all intended to explain AI and how it might affect society in a way people can understand but that is also technically accurate.

I wish more people crossed these vertical and career divides. We have so much to learn from different perspectives, different academic disciplines, and different values and beliefs. As a metaphor, you don’t lose your own culture just by visiting another country. In fact, as well as learning something new about another culture, you might even learn something about your own that you never realised before, just by looking at it from another place.

SE: What are you most worried about? Most hopeful?

PF: Perhaps one of the things I feel most sad about is the loss of people’s ability to listen to each other generally. I don’t want or expect everyone to think the same or even like each other, but different points of view and disagreement are essential for advancing knowledge and learning.

When everyone seems to be shouting at each other but nobody is really listening, I don’t feel that helps anyone, and I feel much of the suffering in the world comes from this, as well as from a lack of understanding and learning. We have some really difficult challenges, including how we deal with AI: what is a good way to approach it?

One of the most valuable things I learned from my personal development training (and am still learning) is the power of listening. I have to admit, before this I considered myself quite good at debate and argument, and saw it as something to ‘win’ over others. If I listened, it was mostly to gather information that would sharpen my own points so I could win people over and beat the other person, like a contest.

When I look back now, I feel one reason for this is that I didn’t want to look weak. I was perhaps afraid of being intellectually bullied, afraid of looking wrong or being wrong, or afraid of being forced to do something I didn’t agree with.

What I learned from my personal development training is that listening to others isn’t surrender, nor does it mean that you agree with that person.

This is probably obvious to many people, but I was surprised by how people respond when you really listen to them and show them you are listening: people seem to change when they feel heard.

When another person feels really heard, they are far more open to really hearing you. That was one of the most valuable things I learned from that training, and I try to apply it to everything now.

So what I feel most worried about is people not listening to each other, but what I am most hopeful about is that it’s not that hard for us to start listening to each other. Any of us can do this, and from what I see, the positive effects can be huge. I feel we need this to better navigate the challenges of AI and many other things: we need to really listen to and hear different perspectives, more than we do now.

SE: Beyond our great first discussion (!) how is your interview series going? What have you learned so far?

PF: I have more than 20 guests lined up from all over the world, of different ages, and from different backgrounds.

So far I have had you, Carolyn (a graphic artist using AI), Cyrus (a lawyer using AI), Mary (an AI consultant helping small businesses use AI) and, this week, Jonathan (an anthropologist’s take on AI). Each guest has had quite a different take, with some common issues around AI ethics, but also different perspectives and insights.

What I like is that these are voices not normally included in debates and discussions about AI, which usually feature tech experts. I don’t think that’s healthy: everyone is going to be impacted by AI, so why should only a small elite get a say?

In a couple of weeks I’m interviewing a Malaysian mum of four in Kuala Lumpur who taught herself how to use image-based AI to produce some amazing art and images for her publication on Substack. She has some interesting views about AI, shaped by her unique culture and experience, including being a mother.

I’m proud to be including voices like hers in my podcast, which I hope will give a broader view of how humanity feels about AI beyond the opinions of a few Silicon Valley tech bros from a narrow demographic.

SE: What does the future hold for your work?

PF: I’m focussing on three things: a free weekly newsletter and articles, a podcast, and, soon, some online courses. I feel that providing educational content that explains AI in plain English, is technically accurate, and is accessible from anywhere in the world at any time would be very helpful for my core mission.